With Great Data, Comes Great Responsibility II

Privacy-preserving machine learning (PPML) with TEE and MPC.

Recently the Bipartisan Senate AI Working Group called for a staggering $32 billion per year in funding for AI safety research, emphasizing privacy. A study by Cisco reveals that 1 in 4 organizations have already banned generative AI, and 40% have already experienced AI privacy breaches. These developments underscore the need to address data and model security risks in AI.

As a leader in privacy-preserving machine learning (PPML), Bagel's lab has been advancing the field significantly. Building upon our research of PPML approaches using privacy-enhancing technologies (PETs) like differential privacy, federated learning, ZKML, and FHE, we have deeply investigated two additional technologies gaining adoption: trusted execution environments (TEEs) and secure multiparty computation (MPC).

Part 1 of this series covers that earlier research.

In this article, through a blend of theoretical insights and real-world examples, we will demonstrate how TEEs and MPC can be harnessed to build robust, secure, and scalable PPML solutions while navigating the complex regulatory landscape of AI safety and privacy. We will discuss how these techniques can be applied to your use case, compare them to the privacy technologies covered in our previous research post, and analyze their pros and cons.

And if you're in a rush, we have a TLDR at the end.

Trusted Execution Environments

Trusted Execution Environments (TEEs) are technologies for hardware-assisted confidential computing. TEEs enable the execution of isolated and verifiable code inside protected memory, also known as enclaves or secure worlds. TEEs ensure that code and data within enclaves are protected from external threats, including malicious software and unauthorized access. This isolation is critical for maintaining the integrity of machine learning models, especially when deployed in untrusted environments such as edge devices or cloud platforms. By using TEEs, developers can safeguard the intellectual property embedded in their models and be sure that the models produce reliable and tamper-proof results.

General View of TEE Components

In a TEE, a user who wants to use the enclave first establishes a secure connection (attestation) using a key generated by a secure processor. The user can verify the integrity of the enclave through an attestation process that is certified by the manufacturer. If the attestation is successful, the user can trust the enclave to execute code and store data securely.

The overall security also depends on the underlying hardware security features, such as memory encryption and isolation. Hardware-based isolation mechanisms ensure that the code and data inside the enclave are protected from unauthorized access by anything outside the enclave. The chip containing the enclave is designed to resist tampering, and any hardware tampering will make the enclave non-functional.

Software Attestation Process

Manufacturer Root Key: The manufacturer has a unique root key embedded in the hardware.

Attestation Keys: Each device processor has an embedded attestation key (private and public) from the manufacturer.

Endorsement Certificate: The manufacturer certifies the public attestation key, indicating it is bound to a tamper-resistant hardware chip.

Verifier Interaction: The verifier sends the TEE a message requesting attestation.

Attestation Response: The TEE responds with its public attestation key and the message signed with the private attestation key.

Verification: The verifier checks the signature on the message using the public attestation key, and checks that key against the manufacturer's endorsement certificate. If both checks pass, the verifier trusts the attestation.

Chain of Trust in Software Attestation

The measurement in the context of TEEs is a cryptographic hash produced from the code and data inside the enclave. The measurement is included in the attestation signature to prove that the enclave was not tampered with. The verifier can then check the measurement against a known good value to ensure the enclave's integrity. Finally, the TEE produces the attestation signature containing the measurement plus other attestation data and sends it back to the verifier. The verifier can then use this attestation signature to verify the integrity of the enclave. In some cases, the verifier shares a secret key that can be used to establish a secure communication channel between the TEE and the verifier.
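The measurement-and-verify flow described above can be sketched roughly as follows. This is a minimal illustration, not how any particular TEE implements attestation: the function names (`measure`, `attest`, `verify`) and the `ATTESTATION_KEY` constant are hypothetical, and an HMAC stands in for the asymmetric, manufacturer-certified signature a real TEE would produce, purely so the sketch stays within the Python standard library.

```python
import hashlib
import hmac
import secrets

# Hypothetical stand-in for the hardware attestation key. Real TEEs sign with an
# asymmetric key certified by the manufacturer; a shared-key HMAC is used here
# only to keep the sketch inside the standard library.
ATTESTATION_KEY = secrets.token_bytes(32)


def measure(enclave_code: bytes, enclave_data: bytes) -> bytes:
    """Measurement: a cryptographic hash over the enclave's code and data."""
    h = hashlib.sha256()
    # Length prefix so that (code, data) boundaries cannot be shifted unnoticed.
    h.update(len(enclave_code).to_bytes(8, "big"))
    h.update(enclave_code)
    h.update(enclave_data)
    return h.digest()


def attest(enclave_code: bytes, enclave_data: bytes, nonce: bytes) -> tuple:
    """TEE side: return the measurement and a tag binding it to the verifier's nonce."""
    measurement = measure(enclave_code, enclave_data)
    tag = hmac.new(ATTESTATION_KEY, nonce + measurement, hashlib.sha256).digest()
    return measurement, tag


def verify(expected: bytes, nonce: bytes, measurement: bytes, tag: bytes) -> bool:
    """Verifier side: check the tag, then compare against the known good value."""
    good_tag = hmac.new(ATTESTATION_KEY, nonce + measurement, hashlib.sha256).digest()
    tag_ok = hmac.compare_digest(tag, good_tag)
    measurement_ok = hmac.compare_digest(measurement, expected)
    return tag_ok and measurement_ok
```

The fresh nonce chosen by the verifier ties each response to one request, so an old attestation cannot simply be replayed.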

Main Hardware-Assisted TEEs

Intel SGX

AMD SEV

Arm TrustZone

Apple Secure Enclave

NVIDIA Backed Authentication

Attack Vectors

Despite the robust security guarantees provided by Trusted Execution Environments (TEEs) through isolation and attestation from a trusted manufacturer, research has shown that TEEs are still vulnerable to several types of attacks:

Side-Channel Attacks: Side-channel attacks exploit indirect information leakage from the TEE, such as timing, power consumption, electromagnetic emissions, or memory access patterns. Examples include:

Timing Side-Channel Attacks: These attacks measure the time taken to execute certain operations to infer sensitive information. Timing fluctuations caused by operations like multiplication and division can be exploited to obtain encryption keys. Studies have shown that timing attacks are one of the most damaging side-channel attacks, particularly in cryptographic implementations. Research by Fei et al. (2021) provides detailed insights into these vulnerabilities.

Memory Side-Channel Attacks: These attacks monitor memory access patterns to deduce the data being processed. Memory-based side-channel attacks observe events on shared resources in the memory hierarchy, such as cache hits and misses, to infer secret-dependent memory access patterns. Research has demonstrated the effectiveness of these attacks on both CPUs and GPUs. Studies by Sasy et al. (2017) highlight the risks associated with these attacks.

Network Side-Channel Attacks: These attacks analyze network traffic patterns to extract confidential information. Network side-channel attacks exploit the correlation between network traffic and the operations being performed within the TEE. Studies have highlighted the risks associated with these attacks in various networked environments. Research by Costan and Devadas (2016) discusses these vulnerabilities in detail.

Replay Attacks: Replay attacks involve intercepting and retransmitting valid data to create unauthorized effects. For instance, an attacker might replace a newer message with an older one from the same sender, effectively resetting the state of a computation. Bellare et al. (2000) and Mo et al. (2023) provide comprehensive studies of these attacks.

Host-Based Attacks: Host-based attacks exploit vulnerabilities arising from the interactions between the host operating system (OS), user-space processes, and the TEEs running on the same system. Examples include:

Data Poisoning: Malicious data is injected into the system to corrupt the training process of machine learning models. Mo et al. (2023) and Liu et al. (2021) provide detailed analyses of these attacks.

Adversarial Examples: Inputs are crafted to deceive machine learning models into making incorrect predictions. Studies by Mo et al. (2023) and Liu et al. (2021) discuss these vulnerabilities.

Access Pattern Exploitation: Previous research has demonstrated methods to exploit access patterns to TEEs to classify encrypted inputs with high accuracy. This type of attack can reveal sensitive information about the data being processed within the TEE. Grover et al. (2018) and Zhang et al. (2021) provide detailed insights into these vulnerabilities.

These vulnerabilities highlight the need for continuous research and development to get the security of TEEs ready for untrusted peer-to-peer networks. Below, we discuss how TEEs can be used for privacy-preserving machine learning (PPML), especially for inference or model training.

Inference in TEEs

To perform inference inside TEEs, the data provider and the model provider first need to prepare their dataset and model, respectively. They encrypt this information to keep it safe during transit.

Next, a remote attestation ceremony takes place. The data provider, model provider, and the TEE engage in the attestation and key exchange process described above. They verify each other's identities, exchange keys, and establish a secure communication channel.

With trust established, the encrypted dataset and model are transferred into the TEE. Inside the TEE they are decrypted.

Now, the inference begins. The TEE executes the inference process, analyzing the data using the model while ensuring secure isolation. The inference results (the computed labels) are encrypted before being returned to the data provider. The model, having served its purpose, can be discarded. The figure below shows the entire inference process.

Some implementations of this ML inference process include:

Occlumency. A system that leverages Intel SGX to preserve the confidentiality and integrity of user data throughout the entire deep learning inference process (Lee et al. 2019).

Branchy-TEE. A framework that dynamically loads the inference network into the TEE on-demand, based on an early-exit mechanism to break the hardware performance bottleneck of the TEE (Wang et al. 2019).

Origami. A system that provides privacy-preserving inference for large deep neural network models through a combination of enclave cryptographic blinding and accelerator-based computation (Giri Nara et al. 2019).

Training in TEEs

In order to train a model, a data provider needs to load its dataset and an initial model describing the architecture of the network that is going to be trained.

As with inference, the dataset and the initial model are first encrypted. Then remote attestation and key exchange take place between the data provider and the TEE, and a secure communication channel is created. Through this channel, the dataset, the initial model, and the training algorithm are loaded into the TEE. The TEE then executes the training algorithm isolated from any external influence. After a given number of epochs, the weights are returned to the data owner through the secure channel.

Some implementations of ML training are:

TensorSCONE. A secure TensorFlow framework using Intel SGX, which enables the training and usage of TensorFlow models within a TEE (Kunkel et al. 2019).

Graphcore IPUs. Graphcore's IPU Trusted Extensions (ITX) provide a TEE for AI accelerators, ensuring strong confidentiality and integrity guarantees with lower performance overheads for training (Vaswani et al. 2022).

Citadel. This is an ML system that protects both data and model privacy using Intel SGX. Citadel performs distributed training across multiple training enclaves for the data owners and an aggregator enclave for the model owner. For secure aggregation between training enclaves it uses zero-sum masking, a technique where data owners collectively generate masks that sum to zero and apply them to their individual updates before sending them to the aggregator (Bonawitz et al. 2017). The authors of Citadel show that increasing the number of training enclaves from 1 to 32 increases throughput by 4.7x to 19.6x (Zhang et al. 2021).
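To make the zero-sum masking idea concrete, here is a minimal sketch. It is not Citadel's implementation: the helper names and the `MODULUS` ring size are assumptions, updates are taken to be integer (fixed-point encoded) vectors, and a single function generates all masks for brevity, whereas in practice the data owners would derive them collectively.

```python
import secrets

MODULUS = 2**32  # hypothetical ring size for fixed-point encoded updates


def zero_sum_masks(num_parties: int, length: int) -> list:
    """Generate one mask vector per party so that the masks sum to zero mod MODULUS."""
    if num_parties < 2:
        raise ValueError("zero-sum masking needs at least two parties")
    masks = [[secrets.randbelow(MODULUS) for _ in range(length)]
             for _ in range(num_parties - 1)]
    # The last mask cancels the sum of all the others, coordinate by coordinate.
    last = [(-sum(column)) % MODULUS for column in zip(*masks)]
    return masks + [last]


def mask_update(update: list, mask: list) -> list:
    """Each data owner hides its update by adding its own mask."""
    return [(u + m) % MODULUS for u, m in zip(update, mask)]


def aggregate(masked_updates: list) -> list:
    """The aggregator sums the masked updates; the masks cancel out in the sum."""
    return [sum(column) % MODULUS for column in zip(*masked_updates)]
```

For example, with three updates `[1, 2, 3]`, `[10, 20, 30]`, and `[100, 200, 300]`, the aggregator recovers their sum `[111, 222, 333]` while each individual masked update looks uniformly random.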

Pros of TEEs

The advantages of using TEEs with neural networks are:

Confidential Execution. The ML inference and training process is executed within the TEE. The TEE ensures that the data and model are protected from any external access or tampering during the inference process.

Isolation. The TEE isolates the inference and training process from the rest of the system, ensuring that even if the host system is compromised, the data and model remain secure.

Cons of TEEs

The disadvantages of using TEEs with neural networks are:

Requires trust in the manufacturer. The attestation process relies on a certificate for the public attestation key issued by the manufacturer, which acts as a certificate authority. In a peer-to-peer network setting, this trust is an undesirable property (Mo et al. 2023).

Restricted resources. TEEs offer restricted computation resources inside their secure enclave. This forces ML developers to find clever ways to implement ML tasks, for example, efficient partition of ML processes (cf. Mo et al. (2021); Liu et al. (2021)).

ML inside TEEs is still being attacked. The ML process remains vulnerable. Previous research by Grover et al. (2018) demonstrated methods to exploit access patterns to TEEs in order to classify encrypted inputs with high accuracy.

Vulnerable interactions with the outside. This refers to a type of security attack known as "Host-based Attacks" in the context of TEEs. These attacks exploit vulnerabilities arising from the interactions between the host operating system (OS), user-space processes, and the TEEs running on the same system. They include data poisoning and adversarial examples (Mo et al. 2023).

SDK support. Most TEEs only provide a basic low-level SDK, so it is hard to port all ML dependencies into TEEs. Porting ML code therefore still requires a lot of refactoring (Mo et al. 2023).

Large TCB size due to libOS use. The Trusted Computing Base (TCB) is the set of hardware and software components that are critical to the security of a TEE system. A library operating system (libOS) is a lightweight operating system that provides application-level abstractions without the need for a full operating system kernel. A libOS can be used to easily port ML applications into a TEE, but such a port results in a very large TCB, which can reduce security (Mo et al. 2023).

Secure Multiparty Computation (MPC)

Secure Multiparty Computation (MPC) is a cryptographic technique that allows multiple parties to jointly compute a function over their private inputs without revealing those inputs to each other. The key idea behind MPC is to divide the computation into smaller steps, where each party performs a portion of the computation (Lindell 2020).

How It Works

The process usually involves the following steps:

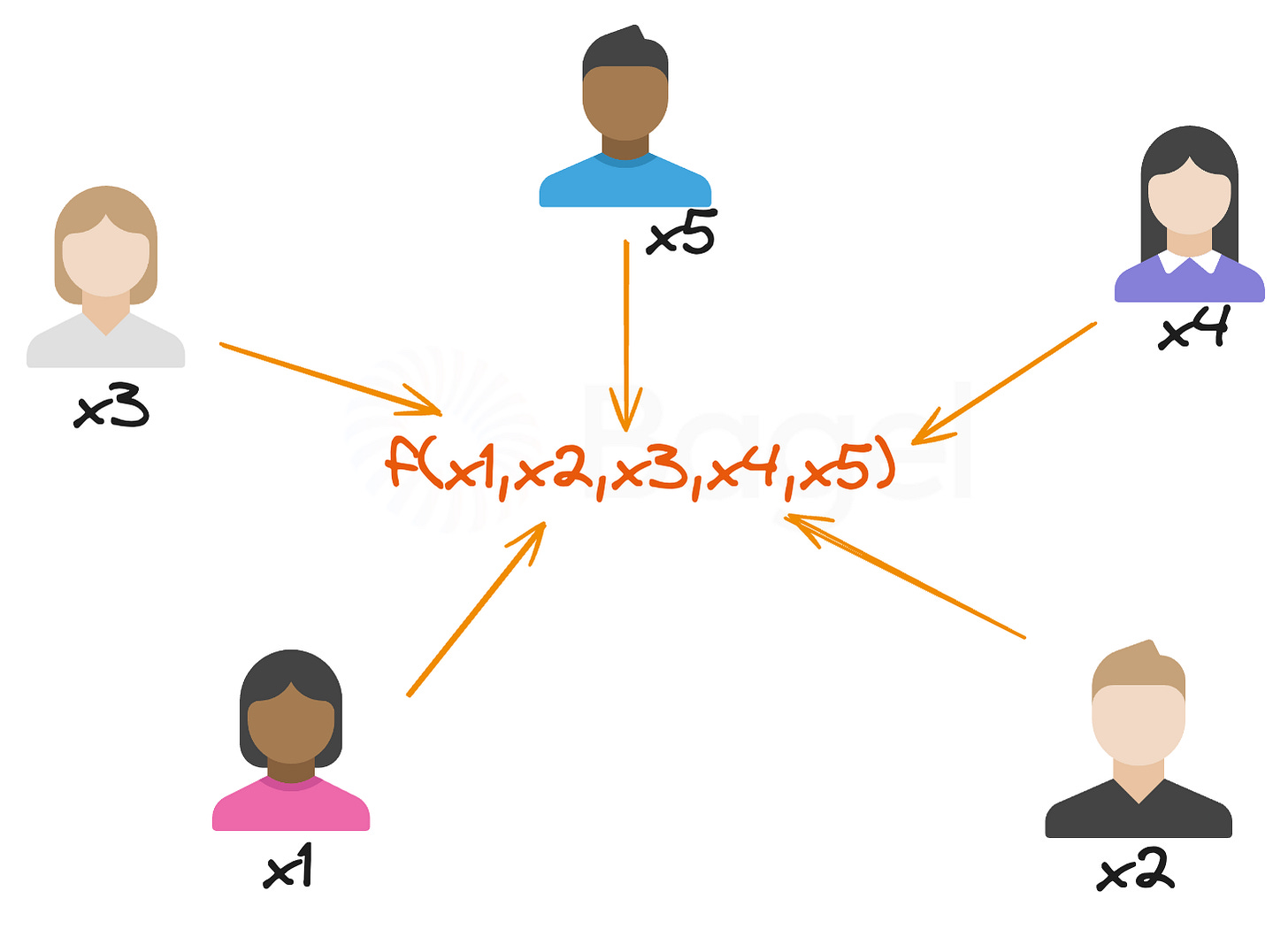

Function Agreement: All parties agree on the function f to be computed, as shown in the image: f(x1, x2, ..., xn).

Input Sharing: Each party provides its private input (x1, x2, x3, x4, x5 in the image) but keeps it hidden from others.

Protocol Execution: The parties interact through a carefully designed protocol to compute the output. This protocol ensures that no party learns anything about the other parties' inputs beyond what is revealed by the output y = f(x1, x2, ..., xn).

Output Computation: The final output y is computed and revealed to all parties.

The key properties of MPC protocols are:

Privacy: No information about any party's input x is revealed beyond what the output y inherently reveals.

Correctness: Each party is guaranteed that the output is correctly computed from the inputs according to the function f, even if some parties deviate from the protocol.

Independence of inputs: Parties choose inputs freely.

Guaranteed output delivery: All parties get the output.

Fairness: Bad actors can't gain an edge (Lindell 2020).

Privacy and correctness are two basic properties that any MPC protocol should support. For stronger security, protocols should also ensure independence of inputs, guaranteed output delivery, and fairness to all parties.

Common Schemes in MPC

Secret Sharing: One common technique used in MPC is secret sharing, discovered independently by Shamir (1979) and Blakley (1979). In secret sharing, a secret is divided into multiple shares, and each share is distributed to a different party. No single share reveals any information about the secret, but when enough shares are combined, they reconstruct the secret. Shamir's Secret Sharing is a well-known method in which the secret is the constant term of a random polynomial, and the shares are points on this polynomial. The secret can be reconstructed using Lagrange interpolation.

Garbled Circuits: Another technique is Garbled Circuits, discovered by Yao (1986), which is used for two-party computations. In this method, one party (the garbler) creates an encrypted version of the function (the garbled circuit) and sends it to the other party (the evaluator). The evaluator uses its input and the garbled circuit to compute the output without learning the other party's input. This method ensures that the computation is secure and private.

Oblivious Transfer: Oblivious transfer is a fundamental building block in many MPC protocols. It allows a sender to send one of many possible messages to a receiver in such a way that the sender does not know which message was received and the receiver learns nothing about the other messages. This technique is crucial for ensuring that parties do not learn more information than they are supposed to.
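Of the schemes above, Shamir's Secret Sharing is the easiest to illustrate in a few lines. The following is a minimal sketch over a prime field; the field size `PRIME` and the function names are assumptions of this example, and a production system would rely on a vetted library instead.

```python
import secrets

PRIME = 2**127 - 1  # a Mersenne prime; the field size is an assumption of this sketch


def split(secret: int, t: int, n: int) -> list:
    """Split `secret` into n shares such that any t of them reconstruct it."""
    if not (1 <= t <= n) or not (0 <= secret < PRIME):
        raise ValueError("need 1 <= t <= n and 0 <= secret < PRIME")
    # Random polynomial of degree t-1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(t - 1)]
    shares = []
    for x in range(1, n + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule, evaluating the polynomial at x
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def reconstruct(shares: list) -> int:
    """Recover the secret by Lagrange interpolation at x = 0."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * (-xj)) % PRIME
                den = (den * (xi - xj)) % PRIME
        # Division in the field is multiplication by the modular inverse (Fermat).
        secret = (secret + yi * num * pow(den, PRIME - 2, PRIME)) % PRIME
    return secret
```

With `shares = split(123456789, t=3, n=5)`, any three of the five shares passed to `reconstruct` return the original secret, while fewer than three reveal nothing about it.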

Common Applications

Data Analytics: MPC enables joint data analysis while keeping inputs private. This is useful in healthcare, finance, and marketing where privacy is crucial. Companies can perform secure statistical analysis on combined datasets without exposing individual records (Zhou et al. 2019).

Auctions and Biddings: MPC can conduct auctions where bids remain private, only revealing the winning bid price. This enhances fairness and privacy in auctions (Bogetoft et al. 2006).

Fraud Detection: By securely analyzing combined transaction data from multiple sources using MPC, fraud detection algorithms can operate on a broader dataset while preserving individual transaction privacy (Nielsen 2021).

Below, we will review how to use MPC to perform inference and training in machine learning in a privacy preserving manner using secret-sharing. The main idea is that data and model are shared and computations occur on shares, not raw data.

MPC in Inference

To explain how inference is done using MPC, we need to introduce some basics of secret sharing. We present here a definition by Evans et al. (2017). A (t,n)-secret sharing scheme splits a secret s into n shares in such a way that any t-1 shares reveal no information about the secret s, while t or more shares allow reconstruction of s. In a two-party secret sharing scheme we have t=n=2. In the following, we explain a simple approach for inference given by Li et al. (2022) in the context of transformer models.

Similarly to zkML and FHE, where all operations of a neural network must be translated into arithmetic operations, we use a secret sharing scheme for computing additions and multiplications. Let us consider two parties, a data provider with a data input x, and a model provider with a model input y consisting of all weights in the model.

To compute additions, the data provider splits x into two shares x1 and x2 with x=x1+x2 and the model provider splits y into y1 and y2 with y=y1+y2. The data provider sends x2 to the model provider and receives y1 in return, so the data provider holds x1 and y1 and the model provider holds x2 and y2. This way, the data provider cannot reconstruct y and the model provider cannot reconstruct x. Then the data provider computes D=x1+y1 and the model provider computes M=x2+y2. The reconstruction process then results in D+M=x+y.
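The addition protocol can be sketched as follows. This is an illustrative simulation in a single process, not a networked implementation; the function names are hypothetical, and shares are taken modulo a ring size `MODULUS` (an assumption of this sketch) so that each share on its own is uniformly random.

```python
import secrets

MODULUS = 2**64  # hypothetical ring size; shares are uniform in [0, MODULUS)


def share(value: int) -> tuple:
    """Split `value` into two additive shares with s1 + s2 = value (mod MODULUS)."""
    s1 = secrets.randbelow(MODULUS)
    s2 = (value - s1) % MODULUS
    return s1, s2


def reconstruct(s1: int, s2: int) -> int:
    """Combine two additive shares back into the value they encode."""
    return (s1 + s2) % MODULUS


def secure_add(x: int, y: int) -> int:
    """Simulate both parties adding their private inputs on shares only."""
    x1, x2 = share(x)  # data provider keeps x1, sends x2 to the model provider
    y1, y2 = share(y)  # model provider keeps y2, sends y1 to the data provider
    D = (x1 + y1) % MODULUS  # computed locally by the data provider
    M = (x2 + y2) % MODULUS  # computed locally by the model provider
    return reconstruct(D, M)  # D + M = x + y
```

Here `secure_add(20, 22)` returns 42, even though neither party ever sees the other's raw input.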

To compute multiplications, the parties can use a secure protocol based on Beaver triples. As before, the inputs are shared so that the data provider holds x1,y1 and the model provider holds x2,y2. A Beaver triple (a,b,c) with c=ab is then generated using oblivious transfer or homomorphic encryption, and a,b,c are secret shared with the data provider receiving a1,b1,c1 and the model provider receiving a2,b2,c2. Now the data provider computes ϵ1=x1-a1 and δ1=y1-b1 and the model provider computes ϵ2=x2-a2 and δ2=y2-b2. They communicate these numbers and reconstruct ϵ=ϵ1+ϵ2 and δ=δ1+δ2. Then the data provider computes r1=c1+ϵ·b1+δ·a1+δ·ϵ and the model provider computes r2=c2+ϵ·b2+δ·a2. The δ·ϵ term is added by only one party, since adding it on both sides would count it twice. The multiplication result is then given by xy=r1+r2, which is an addition operation that can be jointly computed using the protocol of the previous paragraph.
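A Beaver-triple multiplication can be sketched in the same style. This is a single-process simulation under stated assumptions: the function names are hypothetical, arithmetic is modulo `MODULUS`, and the triple comes from a trusted dealer function standing in for the oblivious-transfer or homomorphic-encryption based generation mentioned in the text. Note that in this sketch the ϵ·δ term is added by exactly one party, so that the two result shares sum to xy.

```python
import secrets

MODULUS = 2**64  # hypothetical ring size for the additive shares


def share(value: int) -> tuple:
    """Split `value` into two additive shares modulo MODULUS."""
    s1 = secrets.randbelow(MODULUS)
    return s1, (value - s1) % MODULUS


def beaver_triple() -> tuple:
    """Trusted-dealer stand-in: shares of random a, b and of c = a * b."""
    a = secrets.randbelow(MODULUS)
    b = secrets.randbelow(MODULUS)
    c = (a * b) % MODULUS
    return share(a), share(b), share(c)


def secure_mul(x: int, y: int) -> int:
    """Simulate two-party multiplication of private inputs x and y."""
    x1, x2 = share(x)
    y1, y2 = share(y)
    (a1, a2), (b1, b2), (c1, c2) = beaver_triple()

    # Each party masks its shares with the triple and publishes the result.
    eps1, delta1 = (x1 - a1) % MODULUS, (y1 - b1) % MODULUS  # data provider
    eps2, delta2 = (x2 - a2) % MODULUS, (y2 - b2) % MODULUS  # model provider
    eps = (eps1 + eps2) % MODULUS        # eps = x - a, safe to reveal
    delta = (delta1 + delta2) % MODULUS  # delta = y - b, safe to reveal

    # Only the data provider adds the eps * delta term.
    r1 = (c1 + eps * b1 + delta * a1 + delta * eps) % MODULUS
    r2 = (c2 + eps * b2 + delta * a2) % MODULUS
    return (r1 + r2) % MODULUS  # equals x * y mod MODULUS
```

Expanding r1+r2 gives ab + (x-a)b + (y-b)a + (x-a)(y-b) = xy, which is why revealing ϵ and δ is enough: they are masked by the random a and b and leak nothing about x or y.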

The implementation of more complex operations, like comparisons, can benefit from 3-party MPC as shown by Dong et al. (2023).

MPC in Training

For the case of training with MPC we assume we have two or more data providers that would like to keep their inputs secret and they would like to jointly compute the weights of a model. A secret-sharing scheme was used by Liu et al. (2021) where they showed how to construct an efficient n-party protocol for secure neural network training that can provide security for all honest participants even when a majority of the parties are malicious.

As in the inference case, all operations in the architecture of the neural network must be performed using arithmetic operations, that is, additions and multiplications.

In the case of a 2-party protocol, for each private value x, the data provider splits it into shares x1,x2 and gives x2 to the other data provider. As in the inference case, a Beaver triple is computed and shared between the data providers. In general, for n data providers, each input is partitioned into n shares.
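The n-party sharing step generalizes directly from the two-party case. The sketch below is a single-process illustration with hypothetical function names and an assumed ring size `MODULUS`; it only shows how inputs are partitioned into n shares and how a joint sum is computed on them, not a full training protocol.

```python
import secrets

MODULUS = 2**64  # hypothetical ring size for the additive shares


def share_n(value: int, n: int) -> list:
    """Partition `value` into n additive shares that sum to it modulo MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def reconstruct(shares: list) -> int:
    """All n shares are needed; any n-1 of them look uniformly random."""
    return sum(shares) % MODULUS


def joint_sum(private_inputs: list) -> int:
    """Each data provider shares its input; parties add the shares they hold."""
    n = len(private_inputs)
    # dealt[i][j] is the share of provider i's input that is handed to provider j.
    dealt = [share_n(x, n) for x in private_inputs]
    # Provider j locally adds everything it received, without seeing raw inputs.
    partials = [sum(dealt[i][j] for i in range(n)) % MODULUS for j in range(n)]
    return reconstruct(partials)
```

For instance, `joint_sum([3, 5, 7, 11])` returns 26 while each provider only ever handles random-looking shares of the others' inputs.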

At the end of training, a model is learned and shared between the data provider and the model trainer.

Pros of MPC

The advantages of using MPC with neural networks are:

Joint privacy-preserving data analysis. MPC allows multiple parties to collaboratively analyze data without revealing their individual datasets. This is particularly valuable in industries like healthcare, finance, and marketing, where sensitive data must be protected.

Secure Machine Learning Model Training. MPC can be used to train machine learning models on data from multiple sources without exposing the individual datasets. This is crucial in scenarios where data owners are reluctant to share their data due to privacy concerns or legal restrictions.

Cons of MPC

The disadvantages of using MPC with neural networks are:

Trust. While MPC protocols aim to provide trustless computation, their deployment in real-world systems introduces additional trust assumptions and practical considerations (Lindell et al. 2023).

Complexity. Developing and deploying MPC solutions can be complex. It requires expertise in cryptography, secure protocol design, and distributed systems. Ensuring the correctness and security of the MPC protocol itself is essential, as any vulnerabilities could compromise the privacy of the data being processed (Liu et al. 2021).

TLDR

In Part 1, we discussed the four main privacy-preserving machine learning techniques: differential privacy (DP), zero-knowledge machine learning (ZKML), federated learning (FL), and fully homomorphic encryption (FHE). We assessed these techniques based on data privacy, model algorithm privacy, model weights privacy, and verifiability.

In this Part 2, we looked into two other popular privacy-enhancing technologies: trusted execution environments (TEEs) and secure multiparty computation (MPC).

TEEs allow for isolated and verifiable code execution in protected memory, safeguarding code and data from external threats. However, TEEs require trust in the manufacturer and introduce a single point of failure, which is not suitable for trustless and peer-to-peer (P2P) networks.

MPC enables multiple parties to compute on shared data while keeping inputs private. It facilitates joint privacy-preserving data analysis and secure machine learning model training without exposing individual datasets. However, its deployment in real-world systems introduces trust assumptions.

The table below summarizes our findings—see part 1 for the definitions of each property.

Privacy is essential for AI safety and the Cambrian explosion of growth in the industry. Bagel's lab is eliminating one of the biggest bottlenecks by developing novel privacy-preserving machine learning schemes, addressing current limitations discussed above. These schemes will sit at the heart of the neutral and open machine learning ecosystem we're building.

And eventually, we will open-source this crucial research for the greater good of the open-source AI ecosystem.

Bagel is a deep machine learning and cryptography research lab. Making Open Source AI monetizable using cryptography.
